Understanding Machine Learning: From Theory to Algorithms

Learn machine learning from theory to algorithms with this complete course. It is aimed at readers who want to understand machine learning in depth, it is free of cost, and it is easy to read in PDF format. The course shows you how to build machine learning solutions and machine learning algorithms, so that after reading it you can build your own.

Machine learning is an application of artificial intelligence (AI) that studies computer algorithms which improve automatically through experience with data. Because it learns from data and makes decisions with less human intervention, machine learning can reduce the risk of human error and improve efficiency and accuracy. For example, fraud-detection systems and the recommendation engines at Netflix and Amazon select useful pieces of information from data and combine them to make reliable decisions. For many companies, machine learning has become a crucial competitive differentiator.

Algorithms in Machine Learning:

In machine learning, an "algorithm" is a procedure that is run on data to create a model. In research papers, machine learning algorithms are usually described using pseudocode or linear algebra, and you can compare the computational speed of one algorithm with that of another. Machine learning algorithms can be implemented in modern programming languages, and many of them are collected in libraries that expose a standard application programming interface (API). For example, the scikit-learn library implements a wide range of classification, regression, and clustering algorithms in Python.
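To make "an algorithm run on data produces a model" concrete, here is a minimal sketch in plain Python. The class `SimpleCentroidClassifier` is a hypothetical illustration, not scikit-learn code: it only imitates the common `fit`/`predict` convention, where `fit` runs the learning algorithm on training data and stores the resulting model, and `predict` applies that model to new inputs. Here the "model" is simply the mean point (centroid) of each class.

```python
from collections import defaultdict
from math import dist


class SimpleCentroidClassifier:
    """Hypothetical toy classifier: fit() learns one centroid per class."""

    def fit(self, X, y):
        # Group the training points by their label.
        groups = defaultdict(list)
        for point, label in zip(X, y):
            groups[label].append(point)
        # The learned model: the coordinate-wise mean of each class's points.
        self.centroids_ = {
            label: tuple(sum(coord) / len(points) for coord in zip(*points))
            for label, points in groups.items()
        }
        return self

    def predict(self, X):
        # Assign each point to the class whose centroid is nearest (Euclidean).
        return [
            min(self.centroids_, key=lambda label: dist(point, self.centroids_[label]))
            for point in X
        ]


X_train = [(0.0, 0.0), (0.5, 0.2), (4.0, 4.0), (4.5, 3.8)]
y_train = ["a", "a", "b", "b"]
model = SimpleCentroidClassifier().fit(X_train, y_train)
print(model.predict([(0.2, 0.1), (4.2, 4.1)]))  # ['a', 'b']
```

Separating `fit` from `predict` is what lets a library swap one algorithm for another behind the same interface.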

Uses of Machine Learning:

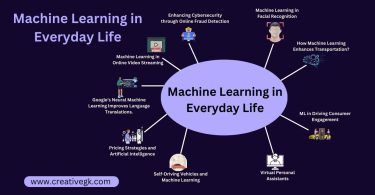

Machine learning is being adopted at a breakneck pace. Often without realizing it, we use it every day in products such as Google Maps and Alexa.

Here are some of the most prominent real-world machine learning applications:

- Email Spam and Malware Filtering

- Self-driving cars

- Product recommendations

- Traffic prediction

- Speech Recognition

This course covers the following topics:

- Introduction: what machine learning is, why it is important, the main types of machine learning, its relation to other fields, and the notation used throughout the book.

- Foundations: the statistical learning framework, Empirical Risk Minimization (ERM), and ERM with inductive bias.

- A Formal Learning Model: PAC learning.

- Uniform convergence: why uniform convergence is sufficient for learnability, and why finite classes are agnostic PAC learnable.

- The VC-Dimension: the definition of the VC-dimension, the proof of the chapter's main theorem, and why infinite-size classes can be learnable.

- Nonuniform Learnability: nonuniform learnability and Structural Risk Minimization (SRM).

- Minimum Description Length and Occam’s Razor, along with a discussion of different notions of learnability.

- The Runtime of Learning: the computational complexity of learning, implementing the ERM rule, and the hardness of learning.

- Linear Predictors: halfspaces, linear regression, and logistic regression.

- Boosting: weak learnability, AdaBoost, and linear combinations of base hypotheses.

- AdaBoost for Face Recognition

- Model Selection and Validation: model selection using SRM, validation, and what to do if learning fails.

- Convex Learning Problems: convexity, Lipschitzness, and smoothness; convex learning problems; and surrogate loss functions.

- Regularization and Stability: regularized loss minimization, Tikhonov regularization as a stabilizer, and controlling the fitting-stability tradeoff.

- Stochastic Gradient Descent: gradient descent, subgradients, and stochastic gradient descent (SGD).

- Support Vector Machines: margin and Hard-SVM, and Soft-SVM and norm regularization.

- Optimality Conditions and Support Vectors

- Duality, and implementing Soft-SVM using SGD.

- Kernel Methods: embeddings into feature spaces, the kernel trick, and implementing Soft-SVM with kernels.

- Multiclass, Ranking, and Complex Prediction Problems: one-versus-all and all-pairs, linear multiclass predictors, structured output prediction, and ranking.

- Decision Trees: sample complexity, decision tree algorithms, and random forests.

- Neural Networks: feedforward neural networks, learning neural networks, the expressive power and the sample complexity of neural networks, and SGD with backpropagation.

- Online Learning: online classification in the realizable and unrealizable cases, online convex optimization, and the online Perceptron algorithm.

- Clustering: linkage-based clustering algorithms, k-means and other cost-minimization clusterings, spectral clustering, and a high-level view of clustering.
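Empirical Risk Minimization (ERM), one of the first ideas in the foundations part of the list above, can be sketched in a few lines. The learner receives a labeled sample S and returns a hypothesis from a finite class H that makes the fewest mistakes on S, i.e. that minimizes the empirical risk L_S(h), the fraction of examples h misclassifies. The threshold class, the sample, and the helper names (`make_threshold`, `erm`) below are our own hypothetical illustration, and ties are broken by taking the first minimizer in the list.

```python
def empirical_risk(h, sample):
    """L_S(h): fraction of labeled examples (x, y) that h misclassifies."""
    return sum(1 for x, y in sample if h(x) != y) / len(sample)


def erm(hypothesis_class, sample):
    """Return a hypothesis from a finite class with minimal empirical risk.

    min() keeps the first minimizer, so ties go to the earliest hypothesis.
    """
    return min(hypothesis_class, key=lambda h: empirical_risk(h, sample))


def make_threshold(t):
    # Hypothetical finite class of threshold classifiers: h_t(x) = 1 iff x >= t.
    def h(x):
        return 1 if x >= t else 0
    h.threshold = t  # remember t so the chosen hypothesis can be inspected
    return h


H = [make_threshold(t) for t in range(0, 11)]  # |H| = 11
S = [(1, 0), (2, 0), (3, 0), (6, 1), (7, 1), (9, 1)]
h_S = erm(H, S)
print(h_S.threshold, empirical_risk(h_S, S))  # 4 0.0
```

Here thresholds 4, 5, and 6 all separate the sample perfectly, so ERM returns the first of them. Because H is finite, this is exactly the setting in which finite classes are shown to be (agnostic) PAC learnable by ERM.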